Watch: Nail-biting video shows Tesla ‘Full Self Driving’ hesitation

Tesla is coming off one of its best years to date, what with its meteoric stock price rise, the introduction of the Model Y, and updates to the Model 3, S, and X.

However, ongoing debates around Tesla Autopilot and Full Self Driving (FSD) have continued to overshadow the American brand’s positive press. The FSD beta rolled out in October last year to owners who opted in to the technology, and more owners gained access earlier this month.

Testing of the FSD beta has produced mixed results so far. Some videos show the technology performing adequately, if perhaps lacking some of a human driver’s intuition. Others show it out of its depth and failing to see other cars.

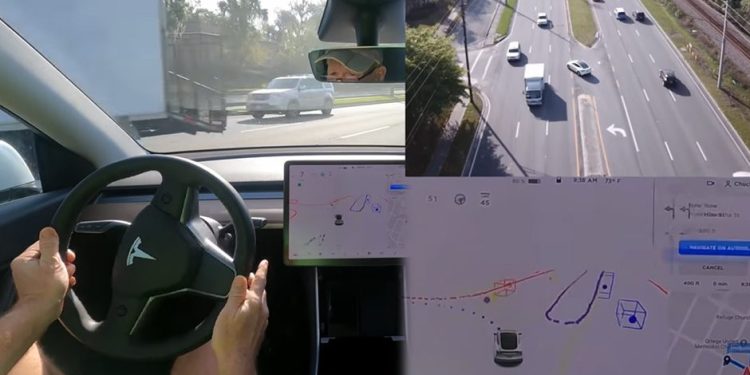

Perhaps one of the most anxiety-inducing and fascinating videos of the system in action is this one, uploaded last week by YouTuber and Tesla Model Y owner Chuck Cook.

Cook set his FSD-equipped Model Y a rather challenging task: an ‘unprotected’ left turn across a busy three-lane road. Each attempt was filmed from several angles: the driver’s perspective, a close-up of the main screen’s FSD display showing ‘what the car sees’, and an overhead drone shot.

Across 10 minutes of attempts, two were particularly unnerving. In one, the Model Y’s FSD appears to commit to crossing traffic while a car in the middle lane is mere metres away. Had Cook not intervened with the brake pedal, a collision would have occurred.

In another attempt, the Model Y starts creeping forward to turn when a large gap in traffic opens up. However, it takes so long to commit to the turn that by the time it does, traffic has arrived and Cook again has to intervene.

It’s worth noting that FSD is likely to be somewhat conservative in this instance because the Tesla is turning onto a low-speed residential road. Even so, the system’s hesitancy is worth documenting and questioning.

The trial technology presents a moral dilemma within the motoring community. Those testing FSD sign a waiver acknowledging that the technology is flawed and in its infancy. Within Tesla and autonomous-driving circles, these early testers are seen as pioneers, helping to refine the system and teach it better behaviour during this raw, early phase.

However, questions remain over whether it is responsible to release and test a system that is still in development on the same roads as commuters who have not signed similar safety waivers.